The list of informal, weird AI benchmarks keeps growing.

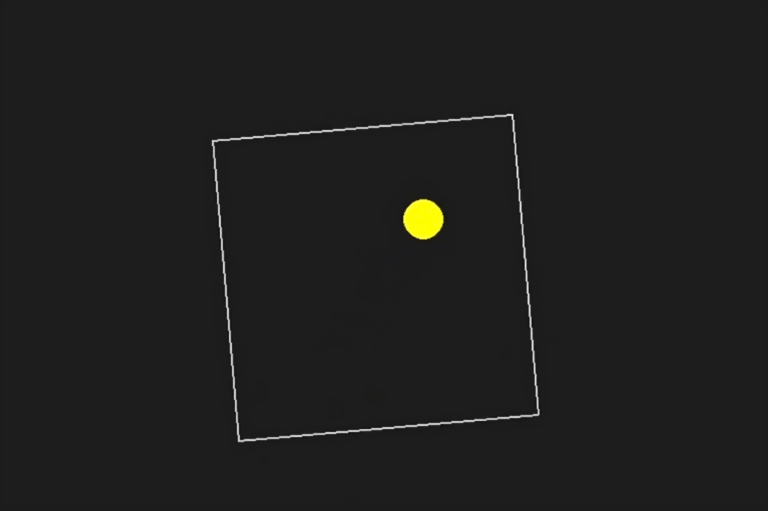

Over the past few days, some of the AI community on X has been absorbed in testing how various AI models, especially so-called reasoning models, handle a prompt like this one: “Write a Python script for a bouncing yellow ball within a shape. Make the shape slowly rotate, and make sure that the ball stays within the shape.”

Some models handle this “ball in a rotating shape” benchmark better than others. According to one user on X, R1, the freely available model from Chinese AI lab DeepSeek, wiped the floor with OpenAI’s o1 pro mode, which costs $200 per month as part of OpenAI’s ChatGPT Pro plan.

👀 DeepSeek R1 (right) crushed o1-pro (left) 👀

Prompt: “Write a Python script for a bouncing yellow ball within a square, and make sure to handle collision detection properly. Make the square slowly rotate. Implement it in Python. Make sure the ball stays within the square.” pic.twitter.com/3sad9efpez

- Ivan Fioravanti ᯅ (@ivanfioravanti) January 22, 2025

According to another X poster, Anthropic’s Claude 3.5 Sonnet and Google’s Gemini 1.5 Pro misjudged the physics, and the ball escaped the shape. Other users reported that Google’s Gemini 2.0 Flash Thinking Experimental, and even OpenAI’s older GPT-4o, aced the evaluation in one go.

Tested 9 AI models on a physics simulation task: rotating triangle + bouncing ball. Results:

🥇 DeepSeek-R1

🥈 Sonar Huge

🥉 GPT-4o

Worst? OpenAI o1: completely misunderstood the task

Video below: first row = reasoning models, rest = base models. pic.twitter.com/eoyrhvnazr

- Aadhithya D (@aadhithya_d2003) January 22, 2025

But what does it actually prove if an AI can or can’t code a rotating shape with a ball bouncing inside it?

Well, simulating a bouncing ball is a classic programming challenge. Accurate simulations incorporate collision detection algorithms, which try to identify when two objects (for example, the ball and a side of the shape) collide. A poorly written algorithm can hurt the simulation’s performance or lead to obvious physics mistakes.
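To make the idea concrete, here is a minimal sketch in Python, assuming a 2D ball inside a square centred at the origin, constant gravity, and fixed-timestep updates. It treats each side of the square as a line segment: find the point on the side nearest the ball’s centre, and if that point is closer than the ball’s radius, push the ball back out and reflect the part of its velocity that points into the wall. The names (`Ball`, `collide_with_wall`, `square_corners`, `step`) are hypothetical and not taken from any model’s output. This is a discrete check that treats each side as momentarily stationary, so it ignores the wall’s own rotational velocity and can miss a collision entirely if the ball moves more than its radius in one frame; shortcuts like these are exactly what can produce obvious physics mistakes.

```python
import math
from dataclasses import dataclass

# Minimal sketch (hypothetical names): discrete collision detection,
# with each wall treated as stationary during a single frame.


@dataclass
class Ball:
    x: float
    y: float
    vx: float
    vy: float
    r: float


def closest_point_on_segment(px, py, ax, ay, bx, by):
    """Return the point on segment AB that is nearest to point P."""
    abx, aby = bx - ax, by - ay
    t = ((px - ax) * abx + (py - ay) * aby) / (abx * abx + aby * aby)
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return ax + t * abx, ay + t * aby


def collide_with_wall(ball, ax, ay, bx, by, restitution=1.0):
    """Resolve an overlap between the ball and wall AB. Returns True on contact."""
    cx, cy = closest_point_on_segment(ball.x, ball.y, ax, ay, bx, by)
    dx, dy = ball.x - cx, ball.y - cy
    dist = math.hypot(dx, dy)
    if dist >= ball.r or dist == 0.0:  # no overlap (degenerate case skipped)
        return False
    nx, ny = dx / dist, dy / dist  # unit normal from the wall toward the ball
    overlap = ball.r - dist
    ball.x += nx * overlap  # push the ball back so it just touches the wall
    ball.y += ny * overlap
    vn = ball.vx * nx + ball.vy * ny  # velocity component along the normal
    if vn < 0:  # reflect only if the ball is moving into the wall
        ball.vx -= (1 + restitution) * vn * nx
        ball.vy -= (1 + restitution) * vn * ny
    return True


def square_corners(half, angle):
    """Corners of a square of half-width `half` at the origin, rotated by `angle`."""
    rad = half * math.sqrt(2)  # centre-to-corner distance
    return [
        (rad * math.cos(angle + math.pi / 4 + k * math.pi / 2),
         rad * math.sin(angle + math.pi / 4 + k * math.pi / 2))
        for k in range(4)
    ]


def step(ball, angle, dt, half=1.0, gravity=-9.8, restitution=0.9):
    """Advance one frame: integrate motion, then test the ball against each side."""
    ball.vy += gravity * dt
    ball.x += ball.vx * dt
    ball.y += ball.vy * dt
    corners = square_corners(half, angle)
    for i in range(4):
        (ax, ay), (bx, by) = corners[i], corners[(i + 1) % 4]
        collide_with_wall(ball, ax, ay, bx, by, restitution)


# Usage: 120 frames per second, square spinning at 0.5 rad/s for 5 seconds.
ball = Ball(x=0.0, y=0.0, vx=1.5, vy=0.0, r=0.1)
dt, spin = 1 / 120, 0.5
for frame in range(600):
    step(ball, angle=spin * frame * dt, dt=dt)
```

The timestep matters here: at 120 frames per second the ball in this setup moves well under its own radius per frame, which is what keeps this simple discrete check from letting it tunnel through a side.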

X user n8programs, a researcher in residence at AI startup Nous Research, says it took about two hours to program a ball bouncing in a rotating shape from scratch. “You have to track multiple coordinate systems, how the collisions are done in each system, and design the code to be robust from the beginning,” n8programs explained.
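The remark about tracking multiple coordinate systems can be illustrated with a short sketch. This is not n8programs’ code; the function names are hypothetical, and it assumes a square centred at the origin spinning at a constant angular velocity `omega`, frictionless walls, and a restitution coefficient `e`. It converts the ball into the square’s own frame, where the walls are fixed, axis-aligned lines, resolves the collision there, and converts the result back to the world frame.

```python
import math

# Hypothetical sketch: resolve ball/wall collisions in the rotating square's
# own coordinate frame. Assumes frictionless walls and constant spin `omega`.


def rotate(x, y, angle):
    """Rotate the vector (x, y) counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return c * x - s * y, s * x + c * y


def collide_in_square_frame(px, py, vx, vy, theta, omega, half, r, e=1.0):
    """Keep a ball of radius r inside a square of half-width `half` centred at
    the origin, currently rotated by `theta` and spinning at `omega` rad/s.

    Takes and returns world-frame position and velocity: (px, py, vx, vy).
    """
    # Velocity of the rotating frame at the ball's position (omega x p in 2D).
    wx, wy = -omega * py, omega * px
    # World frame -> square frame: undo the rotation and subtract the frame's
    # own motion, so the walls become the fixed lines x = +-half, y = +-half.
    lx, ly = rotate(px, py, -theta)
    lvx, lvy = rotate(vx - wx, vy - wy, -theta)

    limit = half - r  # furthest the centre may sit from the square's centre
    if lx > limit:
        lx = limit
        if lvx > 0:  # moving into the +x wall: reflect
            lvx = -e * lvx
    elif lx < -limit:
        lx = -limit
        if lvx < 0:  # moving into the -x wall: reflect
            lvx = -e * lvx
    if ly > limit:
        ly = limit
        if lvy > 0:
            lvy = -e * lvy
    elif ly < -limit:
        ly = -limit
        if lvy < 0:
            lvy = -e * lvy

    # Square frame -> world frame. The frame velocity sampled at the original
    # position has the same normal component as each flat side's contact point,
    # so adding it back gives the correct bounce off a moving wall.
    px, py = rotate(lx, ly, theta)
    vx, vy = rotate(lvx, lvy, theta)
    return px, py, vx + wx, vy + wy


# Usage: a ball at rest touching the +x side while the square spins at 1 rad/s.
# The side is moving toward the ball, so the ball is knocked back into the square.
print(collide_in_square_frame(0.95, 0.5, 0.0, 0.0, 0.0, 1.0, 1.0, 0.1))
```

Working in the rotating frame turns a tilted, moving wall into a fixed line, at the cost of subtracting the frame’s velocity on the way in and adding it back on the way out; skipping that bookkeeping would make the walls behave as if they were not spinning at all.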

But while bouncing balls and rotating shapes are a reasonable test of programming skills, they are not a very empirical AI benchmark. Even slight variations in the prompt can yield different results. That’s why some users on X report having more luck with o1, while others say that R1 falls short.

If anything, viral tests like these point to how hard it is to create useful systems of measurement for AI models. It’s often difficult to tell what distinguishes one model from another outside of esoteric benchmarks that aren’t relevant to most people.

Many efforts are underway to build better tests, such as the ARC-AGI benchmark and Humanity’s Last Exam. We’ll see how those fare. In the meantime, we’ll be watching GIFs of balls bouncing in rotating shapes.