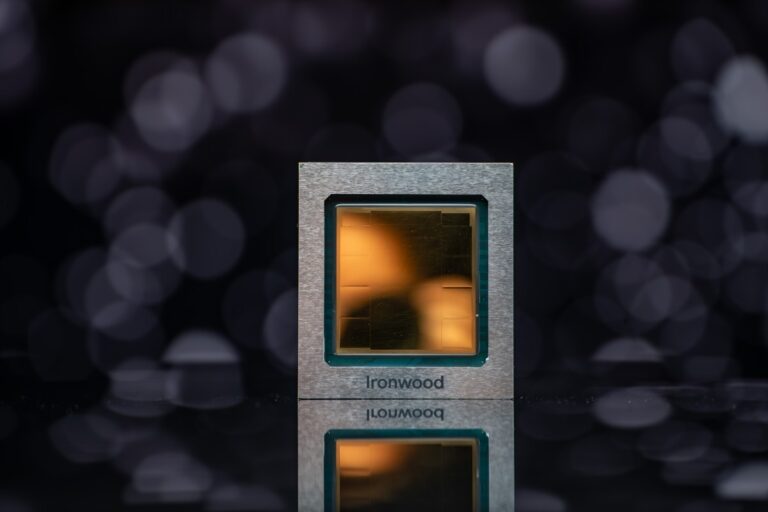

At its Cloud Next conference this week, Google unveiled the latest generation of its TPU AI accelerator chips.

The new chip, called Ironwood, is Google’s seventh-generation TPU and the first optimized for inference, that is, for running AI models. Set to launch for Google Cloud customers later this year, Ironwood will come in two configurations: a 256-chip cluster and a 9,216-chip cluster.

“Ironwood is our most powerful, capable, and energy-efficient TPU,” Google Cloud VP Amin Vahdat wrote in a blog post provided to TechCrunch. “And it’s purpose-built to power thinking, inferential AI models at scale.”

Ironwood arrives as competition in the AI accelerator space heats up. Nvidia may have the lead, but tech giants including Amazon and Microsoft are pushing their own in-house solutions. Amazon has its Trainium, Inferentia, and Graviton processors, available through AWS, and Microsoft hosts Azure instances for its Cobalt 100 AI chip.

Ironwood can deliver 4,614 TFLOPS of computing power at peak, according to Google’s internal benchmarking. Each chip has 192GB of dedicated RAM, with bandwidth approaching 7.4 Tbps.
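To put the per-chip figures in context, a back-of-the-envelope Python sketch can multiply them out for the two announced cluster sizes. It assumes naive linear scaling (every chip at its quoted peak at the same time, no interconnect or utilization losses), so the totals are theoretical ceilings rather than delivered performance; the `aggregate` helper and constant names are hypothetical, chosen only for illustration.

```python
# Per-chip figures as reported by Google (internal benchmarking).
PEAK_TFLOPS_PER_CHIP = 4_614
RAM_GB_PER_CHIP = 192

# The two announced Ironwood configurations, in chips.
CONFIGS = {"256-chip": 256, "9,216-chip": 9_216}


def aggregate(chips: int) -> tuple[float, float]:
    """Return (peak exaFLOPS, total RAM in TB) for a cluster of `chips` chips.

    Naive linear scaling: assumes every chip hits its peak simultaneously,
    which real workloads do not; interconnect and utilization losses are ignored.
    """
    exaflops = chips * PEAK_TFLOPS_PER_CHIP / 1_000_000  # 1 EFLOPS = 10^6 TFLOPS
    ram_tb = chips * RAM_GB_PER_CHIP / 1_000  # decimal units: 1 TB = 1,000 GB
    return exaflops, ram_tb


for name, chips in CONFIGS.items():
    ef, tb = aggregate(chips)
    print(f"{name}: ~{ef:.2f} EFLOPS peak, ~{tb:,.0f} TB of RAM")
```

Run as written, this prints roughly 1.18 EFLOPS and 49 TB for the 256-chip cluster, and roughly 42.52 EFLOPS and 1,769 TB for the 9,216-chip cluster.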

Ironwood has an enhanced specialized core, SparseCore, for processing the types of data common in “advanced ranking” and “recommendation” workloads (e.g., an algorithm that suggests apparel you might like). The TPU’s architecture was designed to minimize data movement and latency on-chip, resulting in power savings, Google says.

Google plans to integrate Ironwood with its AI Hypercomputer, a modular computing cluster in Google Cloud, Vahdat added.

“Ironwood represents a unique breakthrough in the age of inference,” Vahdat said.